UX of equity redesign

Bias mitigation by systems thinking

Recruiter upsell & workflow optimization

Product MVP, User onboarding, feedback loops

Build candidate trust

Decrease hiring bias

Increase application rates

Transform platform perception

In the current hiring landscape, visibility is not always an advantage. Automated résumé parsing, search algorithms, and subtle patterns of unconscious bias influence how recruiters filter and shortlist candidates. These patterns create hidden barriers for groups such as people of color, older professionals, caregivers returning to work, and applicants whose careers have followed unconventional paths. Many of these candidates now choose not to apply at all, anticipating that their profiles will be overlooked before their skills are considered.

Although LinkedIn has become the default platform for professional networking and job searching, its hiring tools have evolved to optimize for speed and volume rather than fairness. This speed-focused model unintentionally amplifies inequities and erodes trust among job seekers. Frustrated candidates increasingly seek community and advice on anonymous forums such as Reddit, Glassdoor, and Blind, where the focus has shifted from applying for roles to sharing experiences and coping with systemic exclusion. This case study examines how introducing an anonymized first-review process could rebuild confidence, reduce bias, and reposition LinkedIn as a platform that prioritizes equity in hiring.

Research began with a qualitative audit of more than three hundred posts across LinkedIn, Reddit, Glassdoor, and Blind. These discussions provided unfiltered accounts of the obstacles people faced during job searches. Themes were synthesized into six meta-insights that guided the design requirements. Personas such as Lina, a caregiving UX designer, and David, a senior product manager navigating age bias, illustrated how structural issues influence application decisions.

These insights shaped three interrelated design streams: messaging within job posts to set expectations, an anonymized applicant experience, and a redesigned recruiter dashboard. Wireframes and flows were iterated through peer review with diversity and inclusion advocates and hiring professionals. The reviews informed adjustments to the tone of messaging, the visual systems that signal anonymity, and the mechanics for when candidate details are revealed. The approach put fairness first, balancing recruiters' need for clarity with applicants' need for psychological safety.

The prototype was developed as a connected set of screens and rules that structured the hiring journey. It defined how jobs that support anonymous review are surfaced, how candidate data is concealed and then progressively disclosed, and how oversight and support flows intervene at key points in the process. Every interaction was designed to encourage unbiased assessment during the earliest and most vulnerable stage of application review.

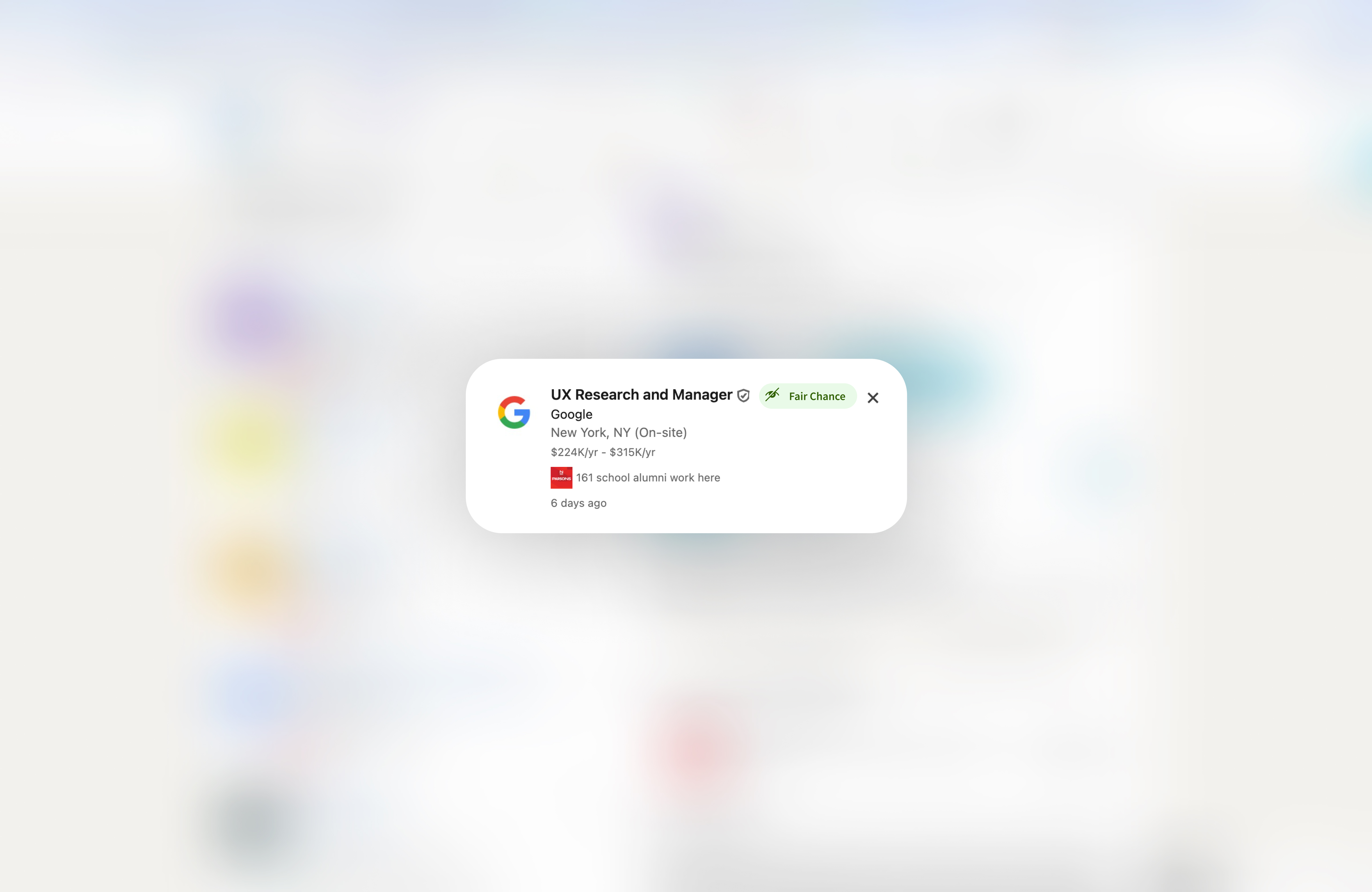

The resulting system reframes early-stage hiring interactions on LinkedIn. Job postings include a clear visual badge and a supporting explanation indicating that the first round of review will be anonymous. This simple signal establishes a baseline of trust before the candidate even starts an application. The applicant flow removes personal identifiers, including names, photographs, and exact dates of employment, and instead introduces experience bands that communicate a general level of expertise. This change reduces the disadvantage of unconventional or non-linear career paths.

On the recruiter side, a redesigned dashboard structures the evaluation process so that first-round decisions are based only on relevant skills and experience. Personal details become visible only after a candidate is shortlisted, reducing the influence of bias on initial filtering. Integrated help content and oversight prompts guide recruiters on how to use the new process effectively.

Beyond improving the fairness of individual hiring decisions, the prototype demonstrates how product design can actively reduce exclusion in large-scale systems. By making fairness visible and by structuring the process to support it, this work positions LinkedIn as more than a transactional job platform. It becomes a trusted environment that actively protects the integrity of opportunity and addresses the growing anxiety that surrounds modern hiring.
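
For readers who want to see the concealment and progressive-disclosure rules in concrete form, the following is a minimal Python sketch, not the prototype's actual logic. The band boundaries, field names, and function names (`experience_band`, `recruiter_view`) are hypothetical and chosen only for illustration; the case study itself does not specify them.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical band boundaries (inclusive, in years); the case study
# does not define the actual bands.
EXPERIENCE_BANDS = [(0, 2, "0-2 years"), (3, 5, "3-5 years"), (6, 10, "6-10 years")]


def experience_band(years: int) -> str:
    """Map an exact number of years to a coarse experience band."""
    if years < 0:
        raise ValueError("years must be non-negative")
    for low, high, label in EXPERIENCE_BANDS:
        if low <= years <= high:
            return label
    return "10+ years"


@dataclass
class Application:
    # Field names are illustrative assumptions, not a real LinkedIn schema.
    name: str
    photo_url: str
    employment_dates: List[str]
    years_experience: int
    skills: List[str]
    shortlisted: bool = False


def recruiter_view(app: Application) -> Dict[str, object]:
    """Return only the fields a recruiter may see at the current stage."""
    # First round: skills and a coarse experience band only.
    view: Dict[str, object] = {
        "skills": list(app.skills),
        "experience_band": experience_band(app.years_experience),
    }
    # Personal identifiers are disclosed only after shortlisting.
    if app.shortlisted:
        view["name"] = app.name
        view["photo_url"] = app.photo_url
        view["employment_dates"] = list(app.employment_dates)
    return view
```

In this sketch, a recruiter calling `recruiter_view` on a new application sees skills and an experience band only; the same call after `shortlisted` is set to `True` also returns the name, photograph, and exact dates. Keeping the rule in one function makes the reveal point explicit and easy to audit.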